At the end of last year, I had the privilege of attending a session organised by Red Hat where they shared their Asia Pacific roadmap with the tech analyst community. The company’s approach of providing a hybrid cloud application platform centred around OpenShift has worked well with clients who favour a hybrid cloud approach. Going forward, Red Hat is looking to build and expand their business around three product innovation focus areas. At the core is their platform engineering, flanked by focus areas on AI/ML and the Edge.

The Opportunities

Besides the product innovation focus, Red Hat is also looking into several emerging areas where they saw initial client success in 2023. While use cases such as operational resilience and edge lifecycle management are long-standing trends, carbon-aware workload scheduling may only just have appeared on the horizon. But two others stood out for me as having potentially huge demand in 2024.

GPU-as-a-Service. GPUaaS addresses a massive demand driven by the meteoric rise of Generative AI over the past 12 months. Any innovation that gives customers more flexible use of scarce and expensive resources such as GPUs can create an immediate opportunity, and Red Hat might have both a first-mover advantage and the advantage of an established installed base. GPUaaS is a particular opportunity in fast-growing markets, where cost and availability are strong inhibitors.

Digital Sovereignty. Digital sovereignty has been a strong driver in some markets. In Indonesia, for example, it has led most cloud hyperscalers to open onshore data centres over the past few years. Yet, not least because of Indonesia’s geography, hybrid cloud remains an important consideration, as digital sovereignty needs to be managed across a diverse infrastructure. Other fast-growing markets face similar challenges and have a strong drive for digital sovereignty. Crucially, Red Hat may well have an advantage where onshore hyperscaler data centres are not yet available (for example, in Malaysia).

Strategic Focus Areas for Red Hat

Red Hat’s product innovation strategy is robust at its core, particularly in platform engineering, but needs more clarity at the periphery. They have already been addressing Edge use cases as an extension of their core platform, especially in the Automotive sector, establishing a solid foundation in this area. Their focus on AI/ML may be a bit more aspirational, as they are looking not only to AI-enable their core platform but also to expand it into a platform for running AI workloads. AI may drive interest in hybrid cloud, but only in very specific use cases.

For Red Hat to be successful in the AI space, it must steer away from competing head-on with the cloud-native AI platforms. They must identify the use cases where AI on hybrid cloud has a true advantage. Such use cases will mainly exist in industries with a strong Edge component, possibly combined with a continued heavy reliance on on-site data centres. Manufacturing is the prime example.

Red Hat’s success in AI/ML use cases is tightly connected to their (continuing) success in Edge use cases, both of which build on the solid platform engineering foundation.

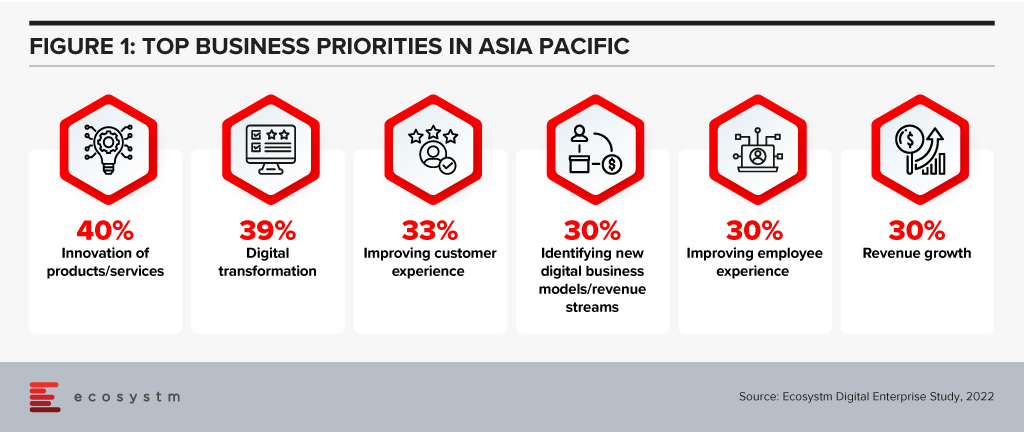

Life never gets any easier for the digital and information technology teams in organisations. The range and reach of the different technologies continue to open new opportunities for organisations that have the foresight and strategy to chase them. Improving offers for existing customers and reaching new segments depend on the organisation’s ability to innovate.

But the complexity of the digital ecosystem means this ability to innovate will be heavily constrained, in many cases causing improvements to take longer and cost more. Addressing the top business priorities expressed in the Ecosystm Digital Enterprise Study, 2022, will require tech teams to simplify as well as add features.

Complexity is Not Just an IT Issue

Many parts of an organisation have been making decisions on implementing new digital capabilities, particularly those that support remote working. Frequently, the IT organisation has not been involved in selecting, implementing or using these new facilities.

The number of start-up organisations delivering SaaS has continued to explode. One area of particular growth has been co-creation tools that teams use to deliver outcomes. In many cases, these have been introduced by enthusiastic users looking to improve their immediate working environment without understanding single-sign-on requirements, the security and privacy of information, or the importance of backup and business continuity planning.

SaaS tools such as Notion, monday.com and ClickUp (amongst many, many others) are being used to coordinate and manage teams across organisations of all sizes. While these are all cloud services, their support and maintenance will ultimately fall to the IT organisation. Yet they won’t be integrated at all with the tools the IT organisation uses to manage and improve user experience.

Every new component adds to the complexity of the tech environment, and with that complexity come more dependencies between components, which slow an organisation’s ability to adapt and evolve. As a result, each change needs more work to deliver, costs increase, and it takes longer to deliver value.

And this increasing complexity causes further problems with cybersecurity. Without regular attention, legacy systems will increase the attack surface of organisations, making it easier to compromise an organisation’s environment. At a recent executive forum with CISOs, attendees rated the risks caused by their legacy systems as their most significant concern.

An organisation’s leadership needs to both simplify and advance its digital capabilities to remain competitive. This balance should not be left to the IT organisation alone, as it will not be able to deliver both without wider support and recognition of the problems.

Discriminate on Differentiating Skills

One thing we can be sure of is that we won’t be able to employ all the skills we need for our future capabilities. We are not training enough people in the skills that we need now and for the future, and the range of technologies continues to expand, increasing the number of skills that we will need to keep an organisation running.

Most organisations are not removing or replacing ageing systems, preferring to keep them running at an apparently low cost. Often these legacy systems are fully depreciated, have low maintenance costs and have few changes made to them, as other areas of the organisation offer better investment options. But this also means that the old skills remain necessary.

So organisational leaders are adding new skills requirements on top of old ones, and the older skills are becoming less attractive as so many new languages, frameworks and databases become available. Wikipedia has a very long list of programming languages developed over the years. Some from the 1950s, such as FORTRAN and LISP, are still in use today.

Organisations will not be in a position to employ all the skills they need to implement, develop and maintain their digital infrastructure and applications. The choice will be which skills are most important to an organisation. This selection needs to be very discriminating and focus on differentiating skills: those that really make a difference within your ecosystem, particularly for your customers and employees.

Organisations will need great partners who can deliver the more generic skills and services. Such partners have better economies of scale and skill, and will free management to attend to the things most important to customers and employees.

Hybrid Cloud has an Edge

Almost every organisation has a hybrid cloud environment. This is not a projection – it has already happened. And most organisations are not well equipped to deal with this situation.

Organisations may not be aware that they are using multiple public clouds. Many of the niche SaaS applications an organisation uses run on Microsoft Azure, AWS or GCP, so it is highly likely that organisations are already using multiple public clouds, not to mention offerings from vendors such as Oracle, Salesforce, SAP and IBM. IT teams need to be able to monitor, manage and maintain this complex set of environments. But we are only in the early stages of integrating these different services and systems.

But there is a third leg to this digital infrastructure stool that is becoming increasingly important: what we call “the Edge”, where applications are deployed as part of the sensors that collect data in different environments. This includes applications such as pattern recognition systems embedded in cameras, so that network and server delays cannot affect the performance of the edge systems. We can see this happening even in our homes: Google supports its Nest domestic products, while Alexa uses AWS, not to mention Amazon’s Ring home security products.

Given the sheer number of edge devices that already exist, the complexity they add to the hybrid environment is huge. And IT organisations are expected to support and manage them.

Simplify, Specialise, Scale

The lessons for IT organisations are threefold:

- Simplify as much as possible while you are implementing new features and facilities. Retiring legacy infrastructure elements should be consistently included in the IT Team objectives. This should be done as part of implementing new capabilities in areas that are related to the legacy.

- Specialise in the skills that are the differentiators for your organisation with its customers and employees. Find great partners who can provide the more generic skills and services to take this load off your team.

- Scale your hybrid management environment so that you can automate as much of the running of your infrastructure as possible. You need to make your IT Team as productive as possible, and they will need power tools.

For IT vendors, the lessons are similar.

- Simplify customer offers as much as possible so that integration with your offering is fast and frugal. Work with them to reduce and retire as much of their legacy as possible as you implement your services. Duplication of even part of your offer will complicate your delivery of high-quality services.

- Understand where your customers have chosen to specialise and look to complement their skills. And consistently demonstrate that you are the best in delivering these generic capabilities.

- Scale your integration capabilities so that your customers can operate through that mythical single pane of glass. They will be struggling with the complexities of the hybrid infrastructure that include multiple cloud vendors, on-premises equipment, and edge services.

–

Cities worldwide have been facing unexpected challenges since 2020, and in 2022 they will continue to struggle with the after-effects of COVID-19. However, governments have learnt one thing during this ongoing crisis: technology is not the only aspect of a Cities of the Future initiative. Besides technology, Cities of the Future will start revisiting organisational and institutional structures, prioritising goals, and designing and deploying an architecture with data as its foundation.

Cities of the Future will focus on being:

- Safe. Driven by the ongoing healthcare crisis

- Secure. Driven by the multiple cyber attacks on critical infrastructure

- Sustainable. Driven by citizen consciousness and global efforts such as COP26

- Smart. Driven by the need to be agile to face future uncertainties

Read on to find out what Ecosystm Advisors Peter Carr, Randeep Sudan, Sash Mukherjee and Tim Sheedy think will be the leading Cities of the Future trends for 2022.

Click here to download Ecosystm Predicts: The Top 5 Trends for Cities of the Future in 2022

AI has become intrinsic to our personal lives, and we are often completely unaware of technology’s influence on our daily routines. For enterprises too, tech solutions often come embedded with AI capabilities. Today, an organisation’s ability to automate processes and decisions often depends more on its desire and appetite for tech adoption than on the technology itself.

In 2022, the key focus for enterprises will be on being able to trust their Data & AI solutions. This will include trust in their IT infrastructure, architecture and AI services, and will extend to participating in trusted data-sharing models. Technology vendors will lead this discussion and showcase their solutions in the light of trust.

Read what Ecosystm analysts Darian Bird, Niloy Mukherjee, Peter Carr and Tim Sheedy think will be the leading Data & AI trends in 2022.

Click here to download Ecosystm Predicts: The Top 5 Trends for Data & AI in 2022 as PDF

One of the biggest impacts of the pandemic has been the uptick in cloud adoption. Ecosystm research shows that more than half of organisations are either building cloud-native applications or have a Cloud-First strategy. Cloud infrastructure, platforms and software became key enablers of the business agility and innovation that organisations needed to survive and succeed.

However, as organisations look to become data-driven and digital, they will require seamless access to their data, irrespective of where they are generated (enterprise systems, IoT devices or AI solutions) and where they are stored (public cloud, Edge, on-premises or data centres) to unlock the full value of the data and deliver the insights needed. This will shape the Cloud and Data Centre ecosystem in 2022.

Read on to find out what Ecosystm Analysts Claus Mortensen, Darian Bird, Peter Carr and Tim Sheedy think will be the leading Cloud & Data Centre trends in 2022.

Click here to download Ecosystm Predicts: The Top 5 Trends for Cloud & Data Centre in 2022 as PDF

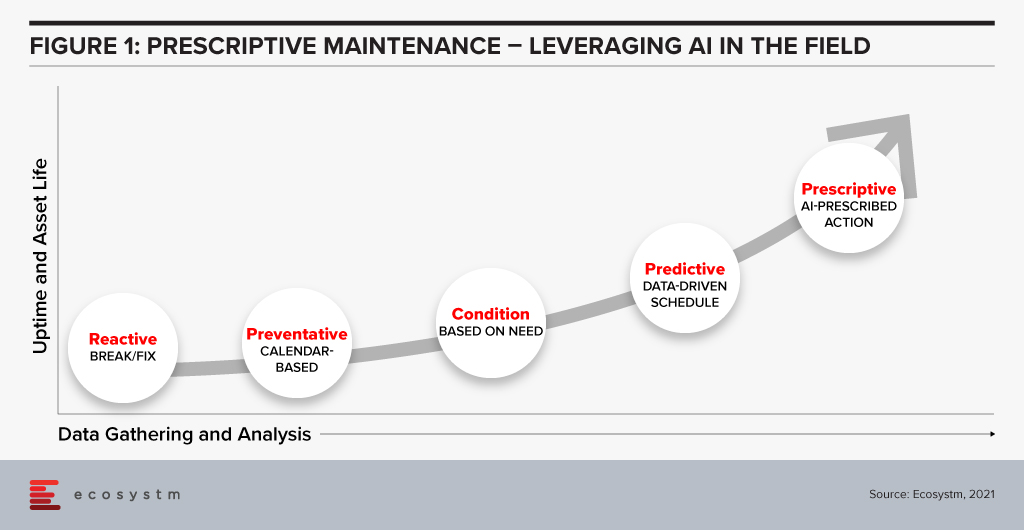

The rollout of 5G combined with edge computing in remote locations will change the way maintenance is carried out in the field. Historically, service teams performed maintenance either in a reactive fashion – fixing equipment when it broke – or using a preventative calendar-based approach. Neither of these methods is satisfactory, with the former being too late and resulting in failure while the latter is necessarily too early, resulting in excessive expenditure and downtime. The availability of connected sensors has allowed service teams to shift to condition monitoring without the need for taking equipment offline for inspections. The advent of analytics takes this approach further and has given us optimised scheduling in the form of predictive maintenance.

The next step is prescriptive maintenance, in which AI can recommend action based on current and predicted condition, according to expected usage or environmental circumstances. This could range from simply alerting an operator to automatically ordering parts and scheduling multiple servicing tasks based on short-term production forecasts.
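To make the step from predictive to prescriptive concrete, here is a minimal Python sketch under stated assumptions. It assumes a predictive model already supplies an estimated number of days to failure, and that the short-term production forecast has been reduced to a list of planned downtime windows (day offsets). `Prediction`, `prescribe` and the safety-margin rule are hypothetical illustrations, not any vendor's method.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    asset_id: str
    days_to_failure: float  # assumed output of an upstream predictive model
    part_in_stock: bool


def prescribe(pred, maintenance_windows, lead_time_days, safety_margin_days=3):
    """Recommend an action for one asset (hypothetical rule set).

    maintenance_windows: day offsets where the production forecast shows
    planned downtime. lead_time_days: days needed to obtain a spare part.
    """
    # Keep only windows that leave a safety margin before predicted failure
    latest_safe_day = pred.days_to_failure - safety_margin_days
    feasible = [d for d in maintenance_windows if d <= latest_safe_day]
    if not pred.part_in_stock:
        # A part ordered now must arrive before the chosen window
        feasible = [d for d in feasible if d >= lead_time_days]
    if not feasible:
        # No window fits: escalate to a human operator
        return ("alert_operator", None)
    # Choose the latest feasible window to avoid servicing too early
    window = max(feasible)
    action = "schedule_service" if pred.part_in_stock else "order_part_and_schedule"
    return (action, window)
```

For example, `prescribe(Prediction("pump-7", 20, True), [3, 10, 18, 25], lead_time_days=5)` returns `("schedule_service", 10)`: day 18 is excluded by the three-day safety margin, and day 10 is preferred over day 3 because servicing later avoids the "too early" cost of calendar-based maintenance.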

Prescriptive maintenance has only become possible with the advancement of AI and digital twin technology, but imminent improvements in connectivity and computing will take servicing to a new level. The rollout of 5G will boost bandwidth, reduce latency, and increase the number of possible connections. Equipment in remote locations, such as transmission lines or machinery in resource industries, will benefit from the higher throughput of 5G connectivity, either as part of an operator’s network rollout or a private on-site deployment. Mobile machinery, particularly vehicles, which can carry hundreds of sensors, will no longer need to wait until arrival before its condition can be assessed. Furthermore, vehicles equipped with external sensors can inspect stationary infrastructure as they pass by.

Edge computing, carried out either by miniature onboard devices or at smaller-scale data centres embedded in 5G networks, ensures that intensive processing can be carried out closer to equipment than in a typical cloud environment. Bandwidth-hungry applications, such as video and time series analysis, can be run with only metadata transmitted immediately and full archives uploaded with less urgency.
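The pattern of sending metadata immediately while deferring the full archive can be sketched as follows. This is a minimal Python illustration under stated assumptions: `EdgeBuffer` is a hypothetical name, the summary fields (count, min, max, mean) are an arbitrary choice, and the actual network transport is left out.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class EdgeBuffer:
    """Hypothetical edge-side buffer: summaries go out now, raw data later."""
    pending_raw: list = field(default_factory=list)

    def process_window(self, sensor_id, samples):
        # Summarise the window locally so only a few numbers cross the network now
        summary = {
            "sensor_id": sensor_id,
            "count": len(samples),
            "min": min(samples),
            "max": max(samples),
            "mean": statistics.fmean(samples),
        }
        # Keep the full window for a later, lower-priority upload
        self.pending_raw.append((sensor_id, list(samples)))
        return summary

    def drain_raw(self, max_windows):
        """Return up to max_windows raw archives (oldest first) for deferred upload."""
        batch, self.pending_raw = self.pending_raw[:max_windows], self.pending_raw[max_windows:]
        return batch
```

In this sketch the caller would send each returned summary straight away and call `drain_raw` only when bandwidth is cheap or idle, which mirrors the split between urgent metadata and less urgent full archives.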

Prescriptive Maintenance with 5G and the Edge – Use Cases

- Transportation. Bridges over railway lines, equipped with high-speed cameras, can monitor passing trains to inspect them for damage. Data-intensive video analysis can be conducted on local devices for a rapid response, while selected raw data can be uploaded to the cloud over 5G to improve inference models.

- Mining. Private 5G networks built at remote sites can provide connectivity between fixed equipment, vehicles, drones, robotic dogs, workers, and remote operations centres. Autonomous haulage trucks can be monitored remotely and, in the event of a breakdown, other vehicles can be automatically redirected to prevent dumping queues.

- Utilities. Emergency maintenance needs can be prioritised ahead of extreme weather events, based on meteorological forecasts and their impact on ageing parts. Machine learning can be used to understand location-specific effects, for example, the effect of salt on offshore wind turbine cables. Early detection of turbine rotor cracks can trigger a recommendation to shut down during high-load periods.

Data as an Asset

Effective prescriptive maintenance only becomes possible after the accumulation and integration of multiple data sources over an extended period. Inference models should understand both normal and abnormal equipment performance in various conditions, such as extreme weather, during incorrect operation, or when adjacent parts are degraded. For many smaller organisations or those deploying new equipment, the necessary volume of data will not be available without the assistance of equipment manufacturers. Moreover, even manufacturers will not have sufficient data on interaction with complementary equipment. This provides an opportunity for large operators to sell their own inference models as a new revenue stream. For example, an electrical grid operator in North America can partner with a similar, but smaller organisation in Europe to provide operational data and maintenance recommendations. Similarly, telecom providers, regional transportation providers, logistics companies, and smart cities will find industry players in other geographies that they do not naturally compete with.

Recommendations

- Employing multiple sensors. Baseline conditions and failure signatures are improved using machine learning based on feeds from multiple sensors, such as those that monitor vibration, sound, temperature, pressure, and humidity. Using multiple sensors makes it possible to identify not only a potential failure but also its cause, and therefore to prescribe a solution more accurately to prevent an outage.

- Data assessment and integration. Prescriptive maintenance is most effective when multiple data sources are unified as inputs. Identify where these sources reside, whether in ERP systems, on-site time series stores, externally provided environmental data, or even emails and paper records. A data fabric should be considered to ensure insights can be extracted from data no matter the environment it resides in.

- Automated action. Reduce the potential for human error or delay by automatically generating alerts and work orders for resource managers and service staff in the event of anomaly detection. Criticality measures should be adopted to help prioritise maintenance tasks and reduce alert noise.
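The recommendations on multiple sensors and automated action can be combined in a short sketch. It swaps in a simple per-sensor z-score baseline as a stand-in for the machine learning models the recommendations refer to, and assumes a hypothetical criticality weight per asset; `build_baseline`, `deviating_sensors`, `work_orders` and the threshold values are all illustrative assumptions.

```python
import statistics
from dataclasses import dataclass


def build_baseline(history):
    """Map each sensor to (mean, stdev) of readings taken under normal operation."""
    return {name: (statistics.fmean(v), statistics.stdev(v)) for name, v in history.items()}


def deviating_sensors(baseline, reading, z_threshold=3.0):
    """Return {sensor: z-score} for readings far outside that sensor's baseline."""
    flagged = {}
    for name, value in reading.items():
        mean, sd = baseline[name]
        if sd == 0:
            continue  # a flat baseline cannot be scored this way
        z = (value - mean) / sd
        if abs(z) >= z_threshold:
            flagged[name] = z
    return flagged


@dataclass
class WorkOrder:
    asset_id: str
    sensors: dict  # which signals deviated: a hint at the likely cause
    priority: float


def work_orders(flags_by_asset, criticality, min_priority=5.0):
    """Turn per-asset anomaly flags into work orders, most urgent first."""
    orders = []
    for asset_id, flags in flags_by_asset.items():
        if not flags:
            continue
        # Priority = asset criticality weight x worst deviation (assumed rule)
        priority = criticality.get(asset_id, 1.0) * max(abs(z) for z in flags.values())
        if priority >= min_priority:  # suppress low-priority alerts as noise
            orders.append(WorkOrder(asset_id, flags, priority))
    return sorted(orders, key=lambda o: o.priority, reverse=True)
```

Keeping the deviating sensors on each work order is what lets a technician see *why* the asset was flagged (for example, vibration but not temperature), while the criticality threshold addresses the alert-noise concern.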

As we return to the office, there is a growing reliance on devices to tell us how safe and secure the environment is for our return. And in specific application areas, such as Healthcare and Manufacturing, IoT data is critical for decision-making. In some sectors such as Health and Wellness, IoT devices collect personally identifiable information (PII). IoT technology is so critical to our current infrastructures that the physical wellbeing of both individuals and organisations can be at risk.

Trust & Data

IoT devices are also vulnerable to breaches if not properly secured. With a significant increase in cybersecurity events over the last year, the reliance on data from IoT is driving the need for better data integrity. Security features such as data integrity and device authentication can be achieved through digital certificates, and these features need to be designed into the device before manufacture. If you cannot trust the IoT devices or their data, there is no point in collecting the data, running analytics on it, and executing decisions based on it.

We discuss the role of embedding digital certificates into the IoT device at manufacture to enable better security and ongoing management of the device.

Securing IoT Data from the Edge

So much of the real-time data collection on networks happens at the Edge. But because of the vast array of IoT devices connecting at the Edge, there has been no established way of baking trust into the manufacture of the devices. In the push to get devices to market, many manufacturers have historically bypassed security. Devices have been added to the network at different times from different sources.

There is a need to verify the IoT devices and secure them, making sure to have an audit trail on what you are connecting to and communicating with.

So from a product design perspective, this leads us to several questions:

- How do we ensure the integrity of data from devices if we cannot authenticate them?

- How do we ensure that the operational systems being automated are controlled as intended?

- How do we authenticate the device on the network making the data request?

Using a Public Key Infrastructure (PKI) approach maintains the assurance, integrity and confidentiality of data streams. PKI has become an important way to secure IoT device applications, and it needs to be built into the design of the device. Device authentication is also an important component, in addition to securing data streams. With good design and PKI management that is up to the task, you should be able to proceed with confidence in the data created at the Edge.

Johnson Controls and DigiCert have designed a new way of managing PKI certification for IoT devices through their partnership and the integration of the DigiCert ONE™ PKI management platform with the Johnson Controls OpenBlue IoT device platform. Based on an advanced, container-based design, DigiCert ONE allows organisations to implement robust PKI deployment and management in any environment, roll out new services, and manage users and devices across the organisation at any scale, no matter the stage of their lifecycle. This creates an operational synergy across the Operational Technology (OT) and IoT spaces to ensure that hardware, software and communication remain trusted throughout the lifecycle.

Rationale on the Role of Certification in IoT Management

Digital certificates ensure the integrity of data and device communications through encryption and authentication, ensuring that transmitted data are genuine and have not been altered or tampered with. With government regulations worldwide mandating secure transit (and storage) of PII data, PKI can help ensure compliance with the regulations by securing the communication channel between the device and the gateway.

Connected IoT devices interact with each other through machine to machine (M2M) communication. Each of these billions of interactions will require authentication of device credentials for the endpoints to prove the device’s digital identity. In such scenarios, an identity management approach based on passwords or passcodes is not practical, and PKI digital certificates are by far the best option for IoT credential management today.

Lifecycle management for connected devices, including renewing certificates before they expire and revoking those that are compromised, is another area where PKI can help secure IoT devices. A robust management platform that enables device management and the revocation and renewal of certificates is a critical component of a successful PKI. IoT devices will also need regular firmware patches and upgrades, and code signing is critical to ensuring the integrity of downloaded firmware: another example of the close linkage between the IoT world and the PKI world.
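The bookkeeping side of certificate lifecycle management can be sketched in Python. This is a hedged illustration only: it assumes the set of revoked serial numbers has already been obtained (in practice from a CRL or an OCSP responder), uses a hypothetical 30-day renewal window, and performs no cryptographic validation, which remains the job of the TLS/PKI library. `DeviceCert`, `cert_status` and `lifecycle_report` are illustrative names, not part of DigiCert ONE or OpenBlue.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class DeviceCert:
    device_id: str
    serial: int
    not_after: datetime  # expiry time taken from the certificate (UTC)


def cert_status(cert, revoked_serials, now, renew_window=timedelta(days=30)):
    """Classify one device certificate for lifecycle management."""
    if cert.serial in revoked_serials:
        return "revoked"  # e.g. key compromised or device decommissioned
    if now >= cert.not_after:
        return "expired"  # stop trusting the device until it is re-enrolled
    if cert.not_after - now <= renew_window:
        return "renew"  # schedule renewal before the certificate lapses
    return "valid"


def lifecycle_report(inventory, revoked_serials, now):
    """Return {device_id: status} for every certificate in the device inventory."""
    return {c.device_id: cert_status(c, revoked_serials, now) for c in inventory}


if __name__ == "__main__":
    now = datetime(2024, 1, 1, tzinfo=timezone.utc)
    inventory = [
        DeviceCert("cam-1", 1, datetime(2024, 6, 1, tzinfo=timezone.utc)),
        DeviceCert("cam-2", 2, datetime(2024, 1, 15, tzinfo=timezone.utc)),
    ]
    print(lifecycle_report(inventory, revoked_serials=set(), now=now))
```

Checking revocation before expiry reflects that a compromised certificate must be distrusted even while it is still within its validity period.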

Summary

PKI certification benefits both people and processes. PKI enables identity assurance, while digital certificates validate the identity of the connected device. Using PKI for IoT is a necessary trend, both to establish trust in the network and to ensure quality control in device management.

Identifying the IoT device is critical to managing its lifespan and recognising its legitimacy on the network. Building PKI capability into the device at manufacture is critical to enabling it throughout its lifetime. Once a device can be recognised, information about it can be maintained in an inventory, and its lifecycle and replacement can be better managed. Once a certificate has been issued and distributed, control of the PKI system enables lifecycle management.