AI has become a business necessity today, catalysing innovation, efficiency, and growth by transforming extensive data into actionable insights, automating tasks, improving decision-making, boosting productivity, and enabling the creation of new products and services.

Generative AI stole the limelight in 2023 given its remarkable advancements and potential to automate various cognitive processes. However, now the real opportunity lies in leveraging this increased focus and attention to shine the AI lens on all business processes and capabilities. As organisations grasp the potential for productivity enhancements, accelerated operations, improved customer outcomes, and enhanced business performance, investment in AI capabilities is expected to surge.

In this eBook, Ecosystm VP Research Tim Sheedy and HPE APAC’s Vinod Bijlani and Aman Deep share their insights on why it is crucial to establish tailored AI capabilities within the organisation.

Over the past year, many organisations have explored Generative AI and LLMs, with some successfully identifying, piloting, and integrating suitable use cases. As business leaders push tech teams to implement additional use cases, the repercussions on their roles will become more pronounced. Embracing GenAI will require a mindset reorientation, and tech leaders will see substantial impact across various ‘traditional’ domains.

AIOps and GenAI Synergy: Shaping the Future of IT Operations

When discussing AIOps adoption, there are commonly two responses: “Show me what you’ve got” or “We already have a team of Data Scientists building models”. The former usually demonstrates executive sponsorship without a specific business case, resulting in a lukewarm response to many pre-built AIOps solutions because no business problem has been defined. On the other hand, organisations with dedicated Data Scientist teams face a different challenge. While these teams can create impressive models, they often face pushback from the business because the solutions frequently do not address operational or business needs. The challenge arises from Data Scientists’ limited understanding of the data, hindering the development of use cases that effectively align with business needs.

The most effective approach lies in adopting an AIOps Framework. Incorporating GenAI into AIOps frameworks can enhance their effectiveness, enabling improved automation, intelligent decision-making, and streamlined operational processes within IT operations.

This allows active business involvement in defining and validating use cases, while enabling Data Scientists to focus on model building. It bridges the gap between technical expertise and business requirements, ensuring AIOps initiatives are influenced by the capabilities of GenAI, address specific operational challenges, and resonate with the organisation’s goals.

The Next Frontier of IT Infrastructure

Many companies adopting GenAI are openly evaluating public cloud-based solutions like ChatGPT or Microsoft Copilot against on-premises alternatives, grappling with the trade-offs between scalability and convenience versus control and data security.

Cloud-based GenAI offers easy access to computing resources without substantial upfront investments. However, companies face challenges in relinquishing control over training data, potentially leading to inaccurate results or “AI hallucinations,” and concerns about exposing confidential data. On-premises GenAI solutions provide greater control, customisation, and enhanced data security, ensuring data privacy, but require significant hardware investments due to unexpectedly high GPU demands during both the training and inferencing stages of AI models.

Hardware companies are focusing on innovating and enhancing their offerings to meet the increasing demands of GenAI. The evolution and availability of powerful and scalable GPU-centric hardware solutions are essential for organisations to effectively adopt on-premises deployments, enabling them to access the necessary computational resources to fully unleash the potential of GenAI. Collaboration between hardware development and AI innovation is crucial for maximising the benefits of GenAI and ensuring that the hardware infrastructure can adequately support the computational demands required for widespread adoption across diverse industries. Innovations in hardware architecture, such as neuromorphic computing and quantum computing, hold promise in addressing the complex computing requirements of advanced AI models.

The synchronisation between hardware innovation and GenAI demands will require technology leaders to re-skill themselves on what they have done for years – infrastructure management.

The Rise of Event-Driven Designs in IT Architecture

IT leaders traditionally relied on three-tier architectures – presentation for user interface, application for logic and processing, and data for storage. Despite their structured approach, these architectures often lacked scalability and real-time responsiveness. The advent of microservices, containerisation, and serverless computing facilitated event-driven designs, enabling dynamic responses to real-time events and enhancing agility and scalability. Event-driven designs are a paradigm shift away from traditional approaches, decoupling components and using events as a central communication mechanism. User actions, system notifications, or data updates trigger actions across distributed services, adding flexibility to the system.
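To make the decoupling concrete, here is a minimal sketch of the idea in Python. It assumes a hypothetical in-process `EventBus` class and two made-up handlers (`record_audit`, `reserve_stock`); a production system would typically rely on a message broker or a cloud eventing service rather than anything like this.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """Hypothetical in-process event bus, for illustration only."""

    def __init__(self) -> None:
        # Maps an event type to the handlers subscribed to it.
        self._handlers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Deliver the event to every subscriber; return how many were notified."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


# Two independent "services" react to the same event without knowing about each other.
audit_log: List[str] = []
stock_levels = {"widget": 10}


def record_audit(event: dict) -> None:
    audit_log.append(f"order {event['order_id']} placed")


def reserve_stock(event: dict) -> None:
    stock_levels[event["item"]] -= event["quantity"]


bus = EventBus()
bus.subscribe("order_placed", record_audit)
bus.subscribe("order_placed", reserve_stock)

# A user action becomes an event; the publisher does not call either service directly.
notified = bus.publish("order_placed", {"order_id": 1, "item": "widget", "quantity": 3})
```

The publisher only knows the event name, so a new service can be added by subscribing another handler, without touching the code that places the order.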

However, adopting event-driven designs presents challenges, particularly in high-volume, transaction-driven workloads where the speed of serverless function calls can significantly impact architectural design. While serverless computing offers scalability and flexibility, the latency introduced by initiating and executing serverless functions may pose challenges for systems that demand rapid, real-time responses. Increasing reliance on event-driven architectures underscores the need for advancements in hardware and compute power. Transitioning from legacy architectures can also be complex and may require a phased approach, with cultural shifts demanding adjustments and comprehensive training initiatives.

The shift to event-driven designs challenges IT Architects, whose traditional roles involved designing, planning, and overseeing complex systems. With GenAI and automation enhancing design tasks, Architects will need to transition to more strategic and visionary roles. GenAI showcases capabilities in pattern recognition, predictive analytics, and automated decision-making, promoting a symbiotic relationship with human expertise. This evolution doesn’t replace Architects but signifies a shift toward collaboration with AI-driven insights.

IT Architects need to evolve their skill set, blending technical expertise with strategic thinking and collaboration. This changing role will drive innovation, creating resilient, scalable, and responsive systems to meet the dynamic demands of the digital age.

Whether your organisation is evaluating or implementing GenAI, the need to upskill your tech team remains imperative. The evolution of AI technologies has disrupted the tech industry, impacting people in tech. Now is the opportune moment to acquire new skills and adapt tech roles to leverage the potential of GenAI rather than being disrupted by it.

At the end of last year, I had the privilege of attending a session organised by Red Hat where they shared their Asia Pacific roadmap with the tech analyst community. The company’s approach of providing a hybrid cloud application platform centred around OpenShift has worked well with clients who favour a hybrid cloud approach. Going forward, Red Hat is looking to build and expand their business around three product innovation focus areas. At the core is their platform engineering, flanked by focus areas on AI/ML and the Edge.

The Opportunities

Besides the product innovation focus, Red Hat is also looking into several emerging areas, where they’ve seen initial client success in 2023. While use cases such as operational resilience or edge lifecycle management are long-existing trends, carbon-aware workload scheduling may just have appeared over the horizon. But two others stood out for me with a potentially huge demand in 2024.

GPU-as-a-Service. GPUaaS addresses a massive demand driven by the meteoric rise of Generative AI over the past 12 months. Any innovation that would allow customers a more flexible use of scarce and expensive resources such as GPUs can create an immediate opportunity, and Red Hat might have the advantage of being a first mover with an established base. GPUaaS is a particular opportunity in fast-growing markets, where cost and availability are strong inhibitors.

Digital Sovereignty. Digital sovereignty has been a strong driver in some markets – for example in Indonesia, where it has led most cloud hyperscalers to open onshore data centres over the past few years. Yet, not least because of Indonesia’s geography, hybrid cloud remains an important consideration, as digital sovereignty needs to be managed across a diverse infrastructure. Other fast-growing markets have similar challenges and a strong drive for digital sovereignty. Crucially, Red Hat may well have an advantage where onshore hyperscalers are not yet available (for example in Malaysia).

Strategic Focus Areas for Red Hat

Red Hat’s product innovation strategy is robust at its core, particularly in platform engineering, but needs more clarity at the periphery. They have already been addressing Edge use cases as an extension of their core platform, especially in the Automotive sector, establishing a solid foundation in this area. Their focus on AI/ML may be a bit more aspirational, as they are looking to not only AI-enable their core platform but also expand it into a platform to run AI workloads. AI may drive interest in hybrid cloud, but it will be in very specific use cases.

For Red Hat to be successful in the AI space, it must steer away from competing straight out with the cloud-native AI platforms. They must identify the use cases where AI on hybrid cloud has a true advantage. Such use cases will mainly exist in industries with a strong Edge component, potentially also with a still heavy reliance on on-site data centres. Manufacturing is the prime example.

Red Hat’s success in AI/ML use cases is tightly connected to their (continuing) success in Edge use cases, all built on the solid platform engineering foundation.

While there has been much speculation about AI being a potential negative force on humanity, what we do know today is that the accelerated use of AI WILL mean an accelerated use of energy. And if that energy source is not renewable, AI will have a meaningful negative impact on CO2 emissions and will accelerate climate change. Even if the energy is renewable, GPUs and CPUs generate significant heat – and if that heat is not captured and used effectively then it too will have a negative impact on warming local environments near data centres.

Balancing Speed and Energy Efficiency

While GPUs draw significantly more power than CPUs, they run many AI algorithms faster – so they can use less energy overall for the same task. But the process still needs to run – and these are additional processes. Data needs to be discovered, moved, stored, analysed, and cleansed. In many cases, algorithms need to be recreated, tweaked, and improved. And then that algorithm itself will kick off new digital processes that are often more processor- and energy-intensive – as organisations might now have a unique process for every customer or for many customer groups, requiring more decisioning and hence more digitally intensive operations.
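The trade-off between power draw and run time comes down to simple arithmetic: energy is power multiplied by time. The sketch below illustrates this with purely hypothetical wattages and run times (they are assumptions chosen for round numbers, not measurements of any real chip or workload).

```python
def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy consumed = average power draw x run time, in kilowatt-hours."""
    return power_watts * hours / 1000.0


# Hypothetical figures for illustration only; not measurements.
cpu_energy = energy_kwh(power_watts=200, hours=10)  # slower, lower power draw
gpu_energy = energy_kwh(power_watts=400, hours=1)   # faster, higher power draw

# The GPU draws twice the power here but finishes ten times sooner.
gpu_uses_less = gpu_energy < cpu_energy
```

With these assumed numbers the GPU job uses a fraction of the energy of the CPU job. The saving disappears quickly, however, once the faster hardware is used to run many more jobs than before, which is the point the paragraph above makes.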

The GPUs, servers, storage, cabling, cooling systems, racks, and buildings have to be constructed – often built from raw materials – and these raw materials need to be mined, transported and transformed. With the use of AI exploding at the moment, so is the demand for AI infrastructure – all of which has an impact on the resources of the planet and ultimately on climate change.

Sustainable Sourcing

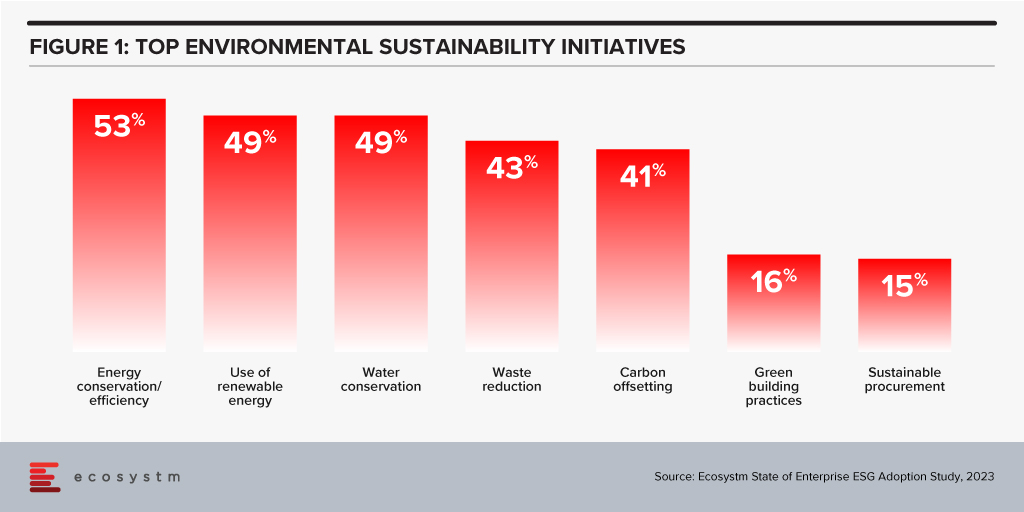

Some organisations understand this already and are beginning to use sustainable sourcing for their technology services. However, it is not a top priority, with Ecosystm research showing that only 15% of organisations focus on sustainable procurement.

Technology Providers Can Help

Leading technology providers are introducing initiatives that make it easier for organisations to procure sustainable IT solutions. The recently announced HPE GreenLake for Large Language Models will be based in a data centre built and run by Qscale in Canada that is not only sustainably built and sourced, but sits on a grid supplying 99.5% renewable electricity – and waste (warm) air from the data centre and cooling systems is funnelled to nearby greenhouses that grow berries. I find the concept remarkable and this is one of the most impressive sustainable data centre stories to date.

The focus on sustainability needs to be universal – across all cloud and AI providers. AI usage IS exploding – and we are just at the tip of the iceberg today. It will continue to grow as it becomes easier to use and deploy, more readily available, and more relevant across all industries and organisations. But we are at a stage of climate warming where we cannot increase our greenhouse gas emissions – and offsetting these emissions just passes the buck.

We need more companies like HPE and Qscale to build this Sustainable Future – and we need to be thinking the same way in our own data centres and putting pressure on our own AI and overall technology value chain to think more sustainably and act in the interests of the planet and future generations. Cloud providers – like AWS – are committed to the net-zero goal (by 2040 in their case) – but this is meaningless if our requirement for computing capacity increases a hundred-fold in that period. Our businesses and our tech partners need to act today. It is time for organisations to demand this of their tech providers to influence change in the industry.

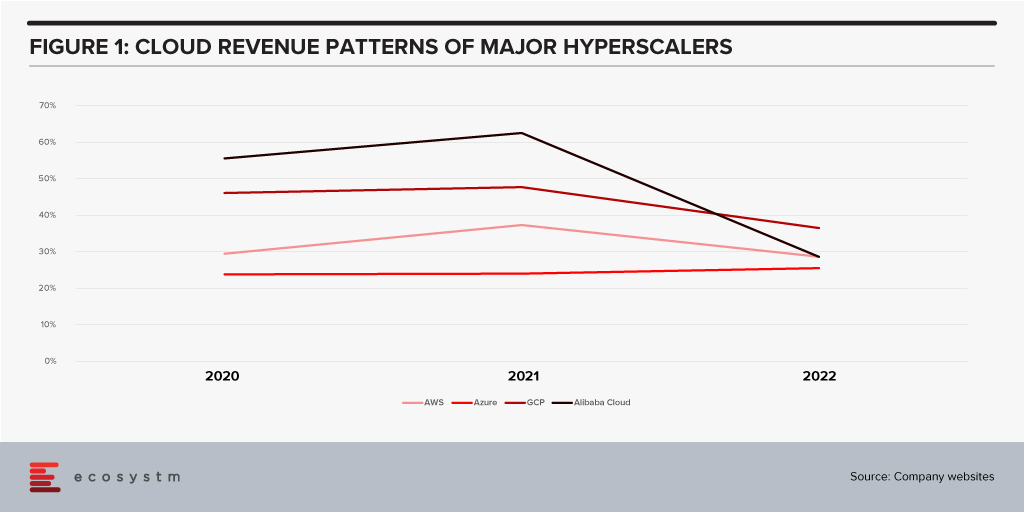

All growth must end eventually. But it is a brave person who will predict the end of growth for the public cloud hyperscalers. The hyperscaler cloud revenues have been growing at between 25% and 60% over the past few years (off very different bases – and often including and counting different revenue streams). Even the current softening of economic spend we are seeing across many economies is only causing a slight slowdown.

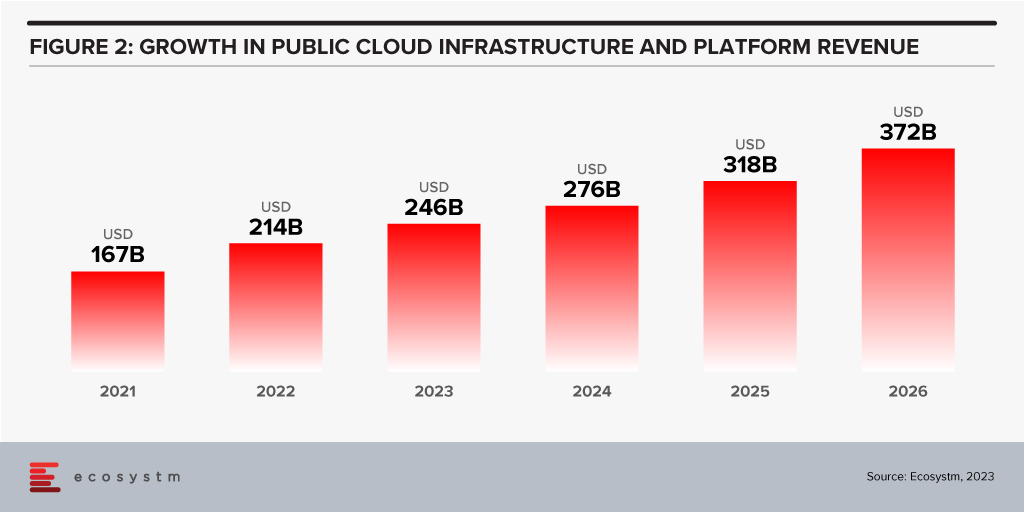

Looking forward, we expect growth in public cloud infrastructure and platform spend to continue to slow in 2024, but to accelerate in 2025 and 2026 as businesses take advantage of new cloud services and capabilities. The sheer size of the market means that percentage growth will be slower than in the past – but we forecast 2026 to see the highest absolute revenue growth of any year since public cloud services were launched.

The factors driving this growth include:

- Acceleration of digital intensity. As countries come out of their economic slowdowns and economic activity increases, so too will digital activity. And greater volumes of digital activity will require an increase in the capacity of cloud environments on which the applications and processes are hosted.

- Increased use of AI services. Businesses and AI service providers will need access to GPUs – and eventually, specialised AI chipsets – which will see cloud bills increase significantly. The extra data storage to drive the algorithms – and the increase in CPU required to deliver the customised or personalised experiences that these algorithms will direct – will also drive increased cloud usage.

- Further movement of applications from on-premises to cloud. Many organisations – particularly those in the Asia Pacific region – still have the majority of their applications and tech systems sitting in data centre environments. Over the next few years, more of these applications will move to hyperscalers.

- Edge applications moving to the cloud. As the public cloud giants improve their edge computing capabilities – in partnership with hardware providers, telcos, and a broader expansion of their own networks – there will be greater opportunity to move edge applications to public cloud environments.

- Increasing number of ISVs hosting on these platforms. The move from on-premises to cloud will drive some growth in hyperscaler revenues and activities – but the ISVs born in the cloud will also drive significant growth. SaaS and PaaS are typically seeing growth above the rates of IaaS – but are also drivers of the growth of cloud infrastructure services.

- Improving cloud marketplaces. Continuing on the topic of ISV partners, as the cloud hyperscalers make it easier and faster to find, buy, and integrate new services from their cloud marketplace, the adoption of cloud infrastructure services will continue to grow.

- New cloud services. No one has a crystal ball, and few people know what is being developed by Microsoft, AWS, Google, and the other cloud providers. New services will exist in the next few years that aren’t even being considered today. Perhaps Quantum Computing will start to see real business adoption? But these new services will help to drive growth – even if “legacy” cloud service adoption slows down or services are retired.

Hybrid Cloud Will Play an Important Role for Many Businesses

Growth in hyperscalers doesn’t mean that the hybrid cloud will disappear. Many organisations will hit a natural “ceiling” for their public cloud services. Regulations, proximity, cost, volumes of data, and “gravity” will see some applications remain in data centres. However, businesses will want to manage, secure, transform, and modernise these applications at the same rate and use the same tools as their public cloud environments. Therefore, hybrid and private cloud will remain important elements of the overall cloud market. Their success will be the ability to integrate with and support public cloud environments.

The future of cloud is big – but like all infrastructure and platforms, cloud is not a goal in itself. What is exciting is what cloud enables, and will further enable, for businesses and customers. As the rates of digitisation and digital intensity increase, the opportunities for the cloud infrastructure and platform providers will blossom. Sometimes they will be the driver of the growth, and other times they will just be supporting actors. But either way, in 2026 – 20 years after the birth of AWS – the growth in cloud services will be bigger than ever.
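A forecast of slower growth alongside record growth can look contradictory, but it is consistent if the first refers to percentage growth and the second to added revenue. The short sketch below shows the arithmetic with hypothetical revenue bases and growth rates (these are assumptions for illustration, not Ecosystm forecast figures).

```python
def absolute_growth(base_revenue: float, growth_pct: float) -> float:
    """Revenue added in a year, given the starting base and a percentage growth rate."""
    return base_revenue * growth_pct / 100.0


# Hypothetical values in USD billions; not forecast data.
earlier_year_added = absolute_growth(base_revenue=100.0, growth_pct=40.0)
later_year_added = absolute_growth(base_revenue=300.0, growth_pct=20.0)

# The growth rate halves, yet the larger base adds more revenue in absolute terms.
later_year_is_bigger = later_year_added > earlier_year_added
```

Under these assumptions the later year adds USD 60 billion against USD 40 billion in the earlier year, despite growing at half the rate.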

Last week, NVIDIA announced that it had agreed to acquire UK-based chip company Arm from Japanese conglomerate SoftBank in a deal estimated to be worth USD 40 billion. In 2016, SoftBank had acquired Arm for USD 32 billion. The deal is set to unite two major chip companies; power data centres and mobile devices for the age of AI and high-performance computing; and accelerate innovation in the enterprise and consumer market.

Rationale for the Deal

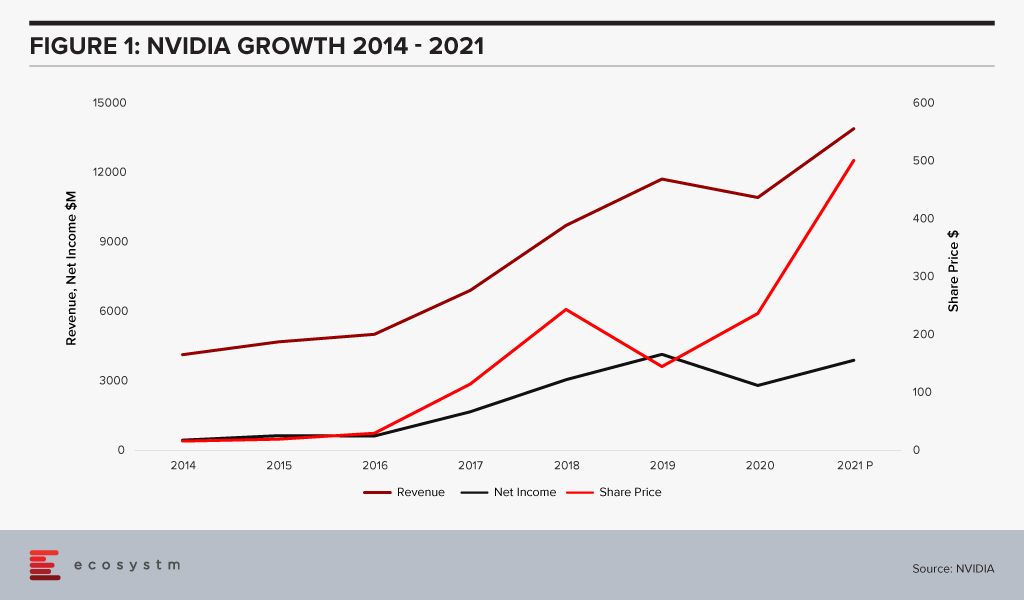

NVIDIA has long been the industry leader in graphics chips (GPUs), and a smaller but significantly profitable player in the chip stakes. With graphics processing being a key component in AI applications like facial recognition, NVIDIA was quick to capitalise. This allowed it to move into data centres – an area long dominated by Intel, which still holds the lion’s share of this market. NVIDIA’s data centre business has grown tremendously – from near zero less than ten years ago to nearly USD 3 billion in the first two quarters of this fiscal year. It contributes 42% of the company’s total sales.

The gaming PC market has been the fastest-growing segment in the PC market, a rare shining light in an otherwise stagnant-to-slightly declining market. NVIDIA has benefited greatly from this with a huge jump in its graphics revenues. Its GeForce brand is one of the most desired in the industry. However, with its success in AI, NVIDIA’s ambition has now grown well beyond the graphics market. Last year NVIDIA acquired Mellanox – which makes specialised networking products, especially in the areas of high-performance computing, data centres, and cloud computing – for almost USD 7 billion. There is clearly a desire to expand the company’s footprint and position itself as a broad-based player in the data centre and cloud space focused on AI computing needs.

The acquisition of Arm though adds a whole new dimension. Arm is the leading technology provider in the mobile chip market. A staggering 90% of smartphones are estimated to use Arm technology. Arm is the colossus of the small chip industry – having crossed 20 billion in unit shipments in 2019.

Acquiring Arm is likely to result in NVIDIA now having a play in the effervescent smartphone market. But the company is possibly eyeing a different prize. Jensen Huang, Founder and CEO of NVIDIA, said “AI is the most powerful technology force of our time and has launched a new wave of computing. In the years ahead, trillions of computers running AI will create a new internet-of-things that is thousands of times larger than today’s internet-of-people. Our combination will create a company fabulously positioned for the age of AI.”

With thoughts of self-driving cars, connected homes, smartphones, IoT, edge computing – all seamlessly working with each other, the acquisition of Arm gives NVIDIA a unique position in this market. As the number of connected devices explodes, and as many billions of sensors become a ubiquitous part of 21st century living, there is going to be a huge demand for low-power processing everywhere. Having that market may turn out to be a larger prize than the smartphone market. The possibilities are endless.

While this deal is supposed to be worth around USD 40 billion, between USD 23 billion and USD 28 billion is going to be paid in the form of NVIDIA stock. This brings us to an extremely interesting dynamic. At the beginning of 2016 NVIDIA’s market cap was less than USD 20 billion. Mighty Intel was at USD 150 billion. AMD, the other chip maker that also sells graphics processors, was at a mere USD 2 billion. In July this year, NVIDIA’s value passed Intel’s and today it is sitting at around USD 300 billion! Intel, with a recent dip, is now close to USD 200 billion. AMD too, with all the tech-fuelled growth in recent years, has grown to just shy of USD 100 billion market cap.
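To put those shifts side by side, the sketch below computes how many times each company’s market cap has multiplied since the start of 2016. The inputs are rounded from the approximate figures quoted above (NVIDIA’s starting value was “less than” USD 20 billion, so its multiple is a lower bound), and the variable names are purely illustrative.

```python
# Approximate market caps in USD billions: (start of 2016, today),
# rounded from the figures quoted in the text above.
market_caps = {
    "NVIDIA": (20, 300),
    "Intel": (150, 200),
    "AMD": (2, 100),
}

# Growth multiple = today's value divided by the 2016 value.
multiples = {name: now / start for name, (start, now) in market_caps.items()}

for name, multiple in multiples.items():
    print(f"{name}: roughly {multiple:.1f}x")
```

On these rounded figures, NVIDIA and AMD have multiplied many times over while Intel has grown by about a third, which is the dynamic that makes paying in stock attractive for NVIDIA.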

What this tells us is that the stock portion of the deal is around 55% cheaper for NVIDIA today than it would have been had the deal been consummated on 1st January 2020. If there was a right time for NVIDIA to buy – it is now. This also shows the way the company has grown revenue at a massive clip, powered by gaming PCs and AI. The deal to buy Arm appears to be a very good idea, which would establish NVIDIA as a leader in the chip industry moving forward.
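The logic behind the 55% figure can be sketched as follows, assuming that “cheaper” means NVIDIA has to issue fewer shares to cover the same dollar value. If the share price has risen by some multiple, the number of shares needed falls by one minus the inverse of that multiple. The function names are illustrative, and the implied multiple is derived from the 55% quoted above rather than from actual share prices.

```python
def stock_discount(price_multiple: float) -> float:
    """Fraction of shares saved when the share price has risen by `price_multiple`."""
    return 1.0 - 1.0 / price_multiple


def implied_price_multiple(discount: float) -> float:
    """Share price multiple needed to produce a given discount (inverse of the above)."""
    return 1.0 / (1.0 - discount)


# A 55% discount implies the share price has risen a little over 2.2 times.
implied = implied_price_multiple(0.55)
round_trip = stock_discount(implied)
```

In other words, a share price that has slightly more than doubled is enough to make the stock component of the deal around 55% cheaper in terms of shares issued.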

Ecosystm Comments

While there appear to be some good reasons for this deal and there are some very exciting possibilities for both NVIDIA and Arm, there are some challenges.

The tech industry is littered with examples of large mergers and splits that did not pan out. Given that this is a large deal between two businesses without a large overlap, this partnership needs to be handled with a great deal of care and thought. The right people need to be retained. Customer trust needs to be retained.

Arm so far has been successful as a neutral provider of IP and design. It does not make chips, far less any downstream products. It therefore does not compete with any of the vendors licensing its technology. NVIDIA competes with Arm’s customers. The deal might create significant misgivings in the minds of many customers about sharing of information like roadmaps and pricing. Both companies have been making repeated statements that they will ensure separation of the businesses to avoid conflicts.

However, it might prove to be difficult for NVIDIA and Arm to do the delicate dance of staying at arm’s length (pun intended) while at the same time obtaining synergies. Collaborating on technology development might prove to be difficult as well, if customer roadmaps cannot be discussed.

Business today also cannot escape the gravitational force of geo-politics. Given the current US-China spat, the Chinese media and various other agencies are already opposing this deal. Chinese companies are going to be very wary of using Arm technology if there is a chance the tap can be suddenly shut down by the US government. China accounts for about 25% of Arm’s market in units. One of the unintended consequences which could emerge from this is the empowerment of a new competitor in this space.

NVIDIA and Arm will need to take a very strategic long-term view, get communication out well ahead of the market and reassure their customers, ensuring they retain their trust. If they manage this well then they can reap huge benefits from their merger.