Over the past year, many organisations have explored Generative AI and LLMs, with some successfully identifying, piloting, and integrating suitable use cases. As business leaders push tech teams to implement additional use cases, the repercussions on their roles will become more pronounced. Embracing GenAI will require a mindset reorientation, and tech leaders will see substantial impact across various ‘traditional’ domains.

AIOps and GenAI Synergy: Shaping the Future of IT Operations

When discussing AIOps adoption, there are commonly two responses: “Show me what you’ve got” or “We already have a team of Data Scientists building models”. The former usually signals executive sponsorship without a specific business case, so many pre-built AIOps solutions receive a lukewarm response because they are not tied to a defined business problem. Organisations with dedicated Data Scientist teams face a different challenge. While these teams can create impressive models, they often face pushback from the business because the solutions frequently fail to address operational or business needs. The challenge arises from Data Scientists’ limited understanding of the data, which hinders the development of use cases that align with business needs.

The most effective approach lies in adopting an AIOps Framework. Incorporating GenAI into AIOps frameworks can enhance their effectiveness, enabling improved automation, intelligent decision-making, and streamlined operational processes within IT operations.

This allows active business involvement in defining and validating use-cases, while enabling Data Scientists to focus on model building. It bridges the gap between technical expertise and business requirements, ensuring AIOps initiatives are influenced by the capabilities of GenAI, address specific operational challenges and resonate with the organisation’s goals.

The Next Frontier of IT Infrastructure

Many companies adopting GenAI are openly evaluating public cloud-based solutions like ChatGPT or Microsoft Copilot against on-premises alternatives, grappling with the trade-offs between scalability and convenience versus control and data security.

Cloud-based GenAI offers easy access to computing resources without substantial upfront investments. However, companies face challenges in relinquishing control over training data, potentially leading to inaccurate results or “AI hallucinations,” and concerns about exposing confidential data. On-premises GenAI solutions provide greater control, customisation, and enhanced data security, ensuring data privacy, but require significant hardware investments due to unexpectedly high GPU demands during both the training and inferencing stages of AI models.

Hardware companies are focusing on innovating and enhancing their offerings to meet the increasing demands of GenAI. The evolution and availability of powerful and scalable GPU-centric hardware solutions are essential for organisations to effectively adopt on-premises deployments, enabling them to access the necessary computational resources to fully unleash the potential of GenAI. Collaboration between hardware development and AI innovation is crucial for maximising the benefits of GenAI and ensuring that the hardware infrastructure can adequately support the computational demands required for widespread adoption across diverse industries. Innovations in hardware architecture, such as neuromorphic computing and quantum computing, hold promise in addressing the complex computing requirements of advanced AI models.

The synchronisation between hardware innovation and GenAI demands will require technology leaders to re-skill themselves on what they have done for years – infrastructure management.

The Rise of Event-Driven Designs in IT Architecture

IT leaders traditionally relied on three-tier architectures – presentation for user interface, application for logic and processing, and data for storage. Despite their structured approach, these architectures often lacked scalability and real-time responsiveness. The advent of microservices, containerisation, and serverless computing facilitated event-driven designs, enabling dynamic responses to real-time events and enhancing agility and scalability. Event-driven designs are a paradigm shift away from traditional approaches, decoupling components and using events as a central communication mechanism. User actions, system notifications, or data updates trigger actions across distributed services, adding flexibility to the system.
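The decoupling described above can be sketched in a few lines of Python. This is a minimal in-process illustration, not a production design: the `EventBus` class, the `order_placed` event name, and the two handlers are hypothetical, and a real deployment would typically place a message broker or serverless trigger where the bus sits.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """In-process stand-in for a message broker (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Register a handler; the publisher never learns who is listening.
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Deliver the event to every subscriber and return how many handlers ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


# Two independent "services" that react to the same event.
stock = {"widget": 10}
audit_log: List[str] = []


def reserve_stock(event: dict) -> None:
    stock[event["sku"]] -= event["quantity"]


def record_audit(event: dict) -> None:
    audit_log.append(f"order {event['order_id']} placed")


bus = EventBus()
bus.subscribe("order_placed", reserve_stock)
bus.subscribe("order_placed", record_audit)

# A user action is published as an event; both services respond.
delivered = bus.publish(
    "order_placed", {"order_id": 1, "sku": "widget", "quantity": 3}
)
```

The publisher only knows the event name, so a third consumer could be added without touching the code that places the order, which is the flexibility the paragraph refers to.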

However, adopting event-driven designs presents challenges, particularly in higher transaction-driven workloads where the speed of serverless function calls can significantly impact architectural design. While serverless computing offers scalability and flexibility, the latency introduced by initiating and executing serverless functions may pose challenges for systems that demand rapid, real-time responses. Increasing reliance on event-driven architectures underscores the need for advancements in hardware and compute power. Transitioning from legacy architectures can also be complex and may require a phased approach, with cultural shifts demanding adjustments and comprehensive training initiatives.

The shift to event-driven designs challenges IT Architects, whose traditional roles involved designing, planning, and overseeing complex systems. With GenAI and automation enhancing design tasks, Architects will need to transition to more strategic and visionary roles. GenAI showcases capabilities in pattern recognition, predictive analytics, and automated decision-making, promoting a symbiotic relationship with human expertise. This evolution doesn’t replace Architects but signifies a shift toward collaboration with AI-driven insights.

IT Architects need to evolve their skill set, blending technical expertise with strategic thinking and collaboration. This changing role will drive innovation, creating resilient, scalable, and responsive systems to meet the dynamic demands of the digital age.

Whether your organisation is evaluating or implementing GenAI, the need to upskill your tech team remains imperative. The evolution of AI technologies has disrupted the tech industry, impacting people in tech. Now is the opportune moment to acquire new skills and adapt tech roles to leverage the potential of GenAI rather than being disrupted by it.

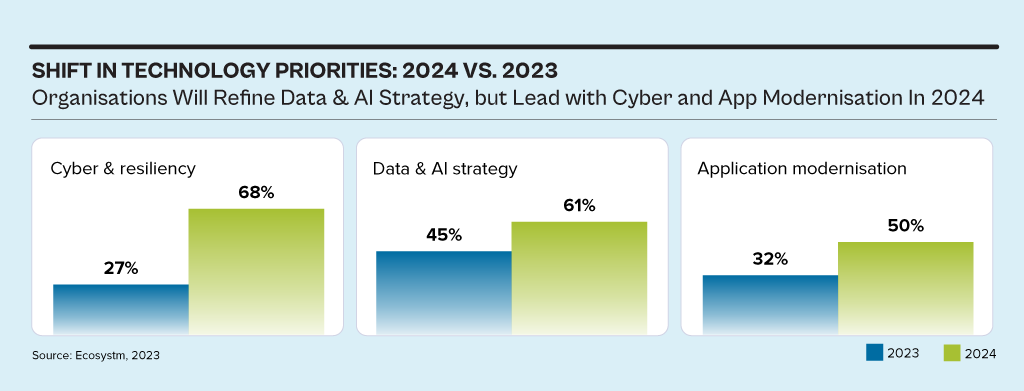

While the discussions have centred around AI, particularly Generative AI in 2023, the influence of AI innovations is extensive. Organisations will urgently need to re-examine their risk strategies, particularly in cyber and resilience practices. They will also reassess their infrastructure needs, optimise applications for AI, and re-evaluate their skills requirements.

This impacts the entire tech market, including tech skills, market opportunities, and innovations.

Ecosystm analysts Alea Fairchild, Darian Bird, Richard Wilkins, and Tim Sheedy present the top 5 trends in building an Agile & Resilient Organisation in 2024.

#1 Gen AI Will See Spike in Infrastructure Innovation

Enterprises considering the adoption of Generative AI are evaluating cloud-based solutions versus on-premises solutions. Cloud-based options present an advantage in terms of simplified integration, but raise concerns over the management of training data, potentially resulting in AI-generated hallucinations. On-premises alternatives offer enhanced control and data security but encounter obstacles due to the unexpectedly high demands of GPU computing needed for inferencing, impeding widespread implementation. To overcome this, there’s a need for hardware innovation to meet Generative AI demands, ensuring scalable on-premises deployments.

The collaboration between hardware development and AI innovation is crucial to unleash the full potential of Generative AI and drive enterprise adoption in the AI ecosystem.

Striking the right balance between cloud-based flexibility and on-premises control is pivotal, with considerations like data control, privacy, scalability, compliance, and operational requirements.

#2 Cloud Migrations Will Make Way for Cloud Transformations

The steady move to the public cloud has slowed down. Organisations – particularly those in mature economies – now prioritise cloud efficiencies, having completed most of their application migration. The “easy” workloads have moved to the cloud – either through lift-and-shift, SaaS, or simple replatforming.

New skills will be needed as organisations adopt public and hybrid cloud for their entire application and workload portfolio.

- Cloud-native development frameworks like Spring Boot and ASP.NET Core make it easier to develop cloud-native applications

- Cloud-native databases like MongoDB and Cassandra are designed for the cloud and offer scalability, performance, and reliability

- Cloud-native storage like Snowflake, Amazon S3 and Google Cloud Storage provides secure and scalable storage

- Cloud-native messaging like Amazon SNS and Google Cloud Pub/Sub provides reliable and scalable communication between different parts of the cloud-native application

#3 2024 Will be a Good Year for Technology Services Providers

Several changes are set to fuel the growth of tech services providers (systems integrators, consultants, and managed services providers).

There will be a return of “big apps” projects in 2024.

Companies are embarking on significant updates for their SAP, Oracle, and other large ERP, CRM, SCM, and HRM platforms. Whether moving to the cloud or staying on-premises, these upgrades will generate substantial activity for tech services providers.

The migration of complex apps to the cloud involves significant refactoring and rearchitecting, presenting substantial opportunities for managed services providers to transform and modernise these applications beyond traditional “lift-and-shift” activities.

The dynamic tech landscape, marked by AI growth, evolving security threats, and constant releases of new cloud services, has led to a shortage of modern tech skills. Despite a more relaxed job market, organisations will increasingly turn to their tech services partners, whether onshore or offshore, to fill crucial skill gaps.

#4 Gen AI and Maturing Deepfakes Will Democratise Phishing

As with any emerging technology, malicious actors will be among the fastest to exploit Generative AI for their own purposes. The most immediate application will be employing widely available LLMs to generate convincing text and images for their phishing schemes. For many potential victims, misspellings and strangely worded appeals are the only hints that an email from their bank, courier, or colleague is not what it seems. The ability to create professional-sounding prose in any language and a variety of tones will unfortunately democratise phishing.

The emergence of Generative AI combined with the maturing of deepfake technology will make it possible for malicious agents to create personalised voice and video attacks. Digital channels for communication and entertainment will be stretched to differentiate between real and fake.

Security training that underscores the threat of more polished and personalised phishing is a must.

#5 A Holistic Approach to Risk and Operational Resilience Will Drive Adoption of VMaaS

Vulnerability management is a continuous, proactive approach to managing system security. It involves not only vulnerability assessments but also developing and implementing strategies to address the vulnerabilities found. This is where Vulnerability Management Platforms (VMPs) become table stakes for small and medium enterprises (SMEs), which cybercriminals often perceive as “easier targets” because of their potentially lower investment in security measures.

Vulnerability Management as a Service (VMaaS) – a third-party service that manages and controls threats and automates vulnerability response for faster remediation – can improve the cybersecurity management of assets and let SMEs focus on their core activities.

In-house security teams will particularly value the flexibility and customisation of dashboards and reports that give them enhanced visibility over all assets and vulnerabilities.

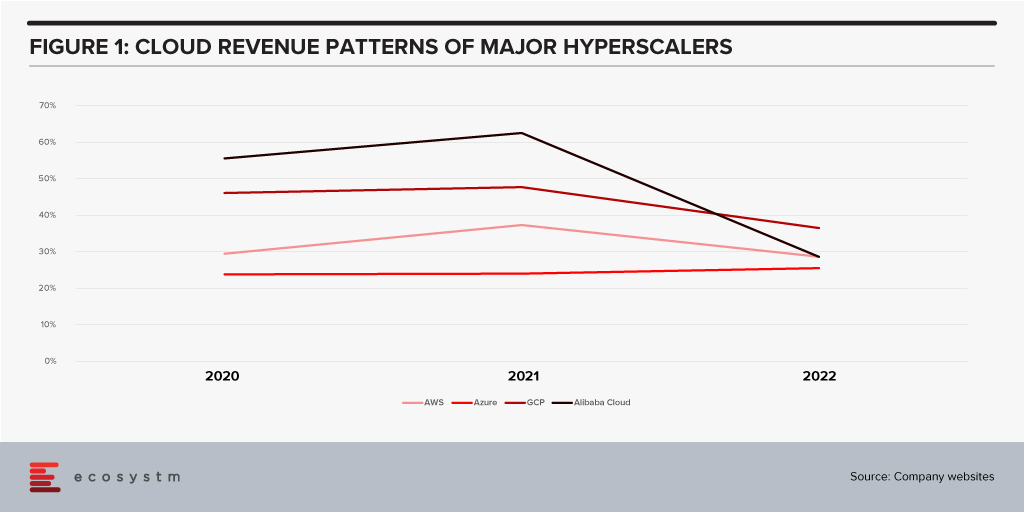

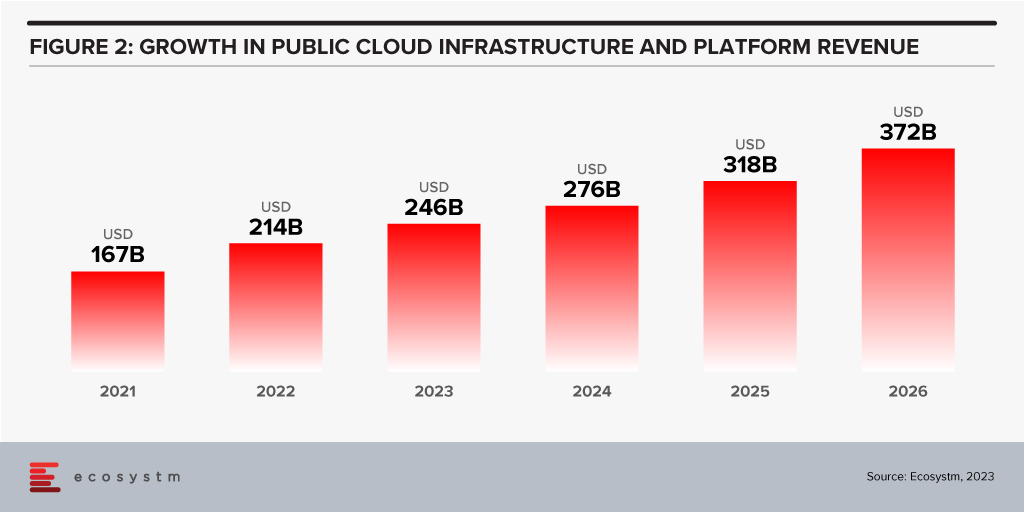

All growth must end eventually. But it is a brave person who will predict the end of growth for the public cloud hyperscalers. Hyperscaler cloud revenues have been growing at between 25% and 60% over the past few years (off very different bases – and often including and counting different revenue streams). Even the current softening of economic spend we are seeing across many economies is only causing a slight slowdown.

Looking forward, we expect growth in public cloud infrastructure and platform spend to continue to decline in 2024, but to accelerate in 2025 and 2026 as businesses take advantage of new cloud services and capabilities. The sheer size of the market means that percentage growth will be slower than in the past, but we forecast 2026 to see the highest absolute revenue growth of any year since public cloud services were founded.
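A slower growth rate and record revenue growth are not in conflict, because the rate applies to a much larger base. The arithmetic can be sketched as follows; the revenue figures and rates are purely hypothetical round numbers chosen for illustration and are not Ecosystm forecasts.

```python
def absolute_growth(base_revenue: float, growth_percent: int) -> float:
    """Revenue added in a year, given the starting base and a percentage growth rate."""
    return base_revenue * growth_percent / 100


# Hypothetical figures only: a small market growing fast
# versus a large market growing more slowly.
early_year = absolute_growth(base_revenue=100.0, growth_percent=40)
later_year = absolute_growth(base_revenue=300.0, growth_percent=20)

print(f"Early year adds {early_year:.1f}; later year adds {later_year:.1f}")
```

In this sketch the later year grows at half the rate but still adds more revenue, which is the pattern the forecast describes.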

The factors driving this growth include:

- Acceleration of digital intensity. As countries come out of their economic slowdowns and economic activity increases, so too will digital activity. And greater volumes of digital activity will require an increase in the capacity of cloud environments on which the applications and processes are hosted.

- Increased use of AI services. Businesses and AI service providers will need access to GPUs – and eventually, specialised AI chipsets – which will see cloud bills increase significantly. The extra data storage to drive the algorithms – and the increase in CPU required to deliver the customised or personalised experiences that these algorithms will direct – will also drive increased cloud usage.

- Further movement of applications from on-premises to cloud. Many organisations – particularly those in the Asia Pacific region – still have the majority of their applications and tech systems sitting in data centre environments. Over the next few years, more of these applications will move to hyperscalers.

- Edge applications moving to the cloud. As the public cloud giants improve their edge computing capabilities – in partnership with hardware providers, telcos, and a broader expansion of their own networks – there will be greater opportunity to move edge applications to public cloud environments.

- Increasing number of ISVs hosting on these platforms. The move from on-premises to cloud will drive some growth in hyperscaler revenues and activities – but the ISVs born in the cloud will also drive significant growth. SaaS and PaaS are typically growing faster than IaaS – but they also drive the growth of cloud infrastructure services.

- Improving cloud marketplaces. Continuing on the topic of ISV partners, as the cloud hyperscalers make it easier and faster to find, buy, and integrate new services from their cloud marketplace, the adoption of cloud infrastructure services will continue to grow.

- New cloud services. No one has a crystal ball, and few people know what is being developed by Microsoft, AWS, Google, and the other cloud providers. New services will exist in the next few years that aren’t even being considered today. Perhaps Quantum Computing will start to see real business adoption? But these new services will help to drive growth – even if “legacy” cloud service adoption slows down or services are retired.

Hybrid Cloud Will Play an Important Role for Many Businesses

Growth in hyperscalers doesn’t mean that the hybrid cloud will disappear. Many organisations will hit a natural “ceiling” for their public cloud services. Regulations, proximity, cost, volumes of data, and “gravity” will see some applications remain in data centres. However, businesses will want to manage, secure, transform, and modernise these applications at the same rate and use the same tools as their public cloud environments. Therefore, hybrid and private cloud will remain important elements of the overall cloud market. Their success will be the ability to integrate with and support public cloud environments.

The future of cloud is big – but like all infrastructure and platforms, cloud is not a goal in itself. What is exciting is what cloud enables, and will further enable, for businesses and customers. As the rates of digitisation and digital intensity increase, the opportunities for the cloud infrastructure and platform providers will blossom. Sometimes they will be the driver of the growth, and other times they will just be supporting actors. But either way, in 2026 – 20 years after the birth of AWS – the growth in cloud services will be bigger than ever.

We can safely bet that in the decade taking us to 2030, technology will continue to impact and shape our world. Ecosystm has already predicted the top technology trends for 2020 (AI, Cloud, Cybersecurity, IoT). It is time to look beyond the year and see what 2030 has in store for us. There is a distinct possibility that there will be newer technologies in future that we are yet to hear of, waiting at the threshold to disrupt the existing tech space. Also by 2030, there will be many technologies that are considered futuristic today that will have become mainstream. Let us explore some of them.

Computing Technology

Quantum computing has been promising to take computing beyond current limits – going beyond bits to qubits. Qubits represent atoms, ions, photons or electrons and their respective control devices that are working together to act as computer memory and processor. It has the potential to process exponentially more data than current computers.
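The step from bits to qubits can be made concrete with a small classical simulation. This is only a sketch under simplifying assumptions: it models a single idealised qubit as a pair of amplitudes, uses real numbers for simplicity, and the function names are illustrative. It says nothing about how physical quantum hardware is built.

```python
import math
from typing import Tuple

State = Tuple[float, float]  # amplitudes for the |0> and |1> outcomes


def hadamard(state: State) -> State:
    """Apply the Hadamard gate, which turns a definite 0 or 1 into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state: State) -> Tuple[float, float]:
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return (abs(state[0]) ** 2, abs(state[1]) ** 2)


def amplitudes_needed(n_qubits: int) -> int:
    """A classical description of n qubits needs 2**n amplitudes."""
    return 2 ** n_qubits


zero: State = (1.0, 0.0)      # behaves like a classical bit set to 0
superposed = hadamard(zero)   # now 0 and 1 are equally likely on measurement
probs = probabilities(superposed)
```

The `amplitudes_needed` helper shows where the word "exponentially" comes from: each additional qubit doubles the size of the state a classical computer would have to track.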

Even with various technology providers such as Google, IBM, AWS and Microsoft having announced their quantum computing innovations, it does not appear to be nearly enough to disrupt computing yet. The race to demonstrate the first usable quantum computer is on, and start-ups such as Rigetti are challenging the established players. Whether or not the vision we have of quantum computers is fully realised, this research will definitely bring in a new breed of computers with advanced processors capable of handling data a million times faster than today’s supercomputers.

Technology providers and government research organisations will continue the R&D initiatives to meet quantum’s real-world potential. Over the next decade, quantum computing applications are set to impact scientific research and exploration, including space exploration and materials science. Quantum computing will also impact us in our everyday lives enabling better weather forecasting and personalised healthcare.

Storage Technologies

Cloud storage has dominated most of the previous decade, enabling real-time access to data for both consumers and enterprises. The current storage technologies are reaching the verge of their theoretical storage capacity, given the amount of data we generate. Researchers continue to push the envelope when it comes to capacity, performance, and the reduction of the physical size of storage media. Some storage technologies of the future that have the potential to become mainstream are:

DNA Data storage

DNA stores the ‘data’ for every living organism and one day it could be the ultimate hard drive! DNA data storage consists of long chains of the nucleotides A, T, C and G – which are the building blocks of our DNA chains. DNA storage and computing systems use liquids to move molecules around, unlike electrons in silicon-based systems. This enables the stored data to be more secure and potentially allows an almost infinite storage lifespan. Researchers from Microsoft and the University of Washington have demonstrated the first fully automated system to store and retrieve data in manufactured DNA – a key step in taking the technology out of the realms of theory and into reality. The technology is still too expensive and requires new engineering solutions for it to be viable in the near future. However, there are several research organisations and start-ups out there working on creating synthetic DNA – data storage is seen as one of the many applications of the technology.
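To illustrate how digital data could map onto the four nucleotides, here is a minimal sketch that assigns two bits to each base. The mapping table is an assumption made for illustration; practical DNA storage schemes are considerably more involved, for example adding error correction and avoiding long runs of the same base.

```python
# Hypothetical 2-bits-per-base mapping, chosen only for illustration.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a nucleotide string, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Recover the original bytes from a nucleotide string."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```

Each byte becomes four bases, so the two-character message above needs an eight-base strand.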

Glass Data storage

5D glass discs are being researched, and experts say that they could store 3000x more data than CDs, with a lifespan of 13.8 billion years. The discs are made out of nanostructured glass, and the data is stored and retrieved using femtosecond laser writing, in which a laser makes microscopic etchings in the glass. The University of Southampton has developed the highest storage efficiency in a data storage device to date. Microsoft’s Project Silica has developed a proof of concept for its quartz glass storage in partnership with Warner Bros, storing the 1978 classic movie Superman on a palm-size glass disc.

Nanotechnology

Nanotechnology is the science of understanding and manipulating materials on a nanometre scale. It has the potential to create the ultimate IoT sensors. Nanotechnology is already being used in the production of some microprocessors, batteries, computer displays, paints and cosmetics. But this really is just the beginning of a nano revolution.

The most researched nanotechnology applications are probably in medical science. By injecting or implanting tiny sensors, detailed information can be gathered about the human body. Innovations in implantable nanotechnology will grow and we will see more applications and innovations such as Smart Dust – a string of smart computers each smaller than a grain of sand. They can arrange themselves in nests in specified body locations for diagnostics or cure. The focus is not only on early detection but also on storing encrypted patient information. MIT is working on a nanotechnology application which involves nanoparticles or tiny robots powered by magnetic fields for targeted drug delivery.

Healthcare is not the only industry where nanotechnology will become mainstream. Materials science will see huge adoption and development of nanotechnology applications, which will drive innovation even in wearable devices and smart clothing. Nanomaterials will be used for monitoring in manufacturing, power plants, aircraft and so on.

Printing Technologies

Astonishingly, it has been nearly 40 years since 3D printing technology was conceptualised. In the last few years, there has been rapid adoption of 3D printers for prototyping in manufacturing and construction. There has also been considerable research into applications such as printing food and replacement organs. For example, companies have already demonstrated that they can create 3D prototypes of human liver tissue patches and implant them in mice.

4D printing will be the next step. With 4D printing, we can change shapes of 3D-printed objects upon stimuli from specific environmental conditions, such as temperature, light or humidity. Although the 4D printing technology is still in the R&D phase, this technology of the future has huge implications in the applications of engineering, manufacturing and construction. For instance, 4D printed bricks could change shape and size when placed alongside other bricks on the wall giving us better insights on how to protect property from natural disasters.

Connectivity Technologies

The world is becoming increasingly connected. Access to fast and reliable Internet is one of the many reasons for economic and social progress. There is constant R&D on a more seamless connectivity option. Industry giants are coming up with solutions to connect people in remote areas – such as providing emergency connectivity through helium balloons. Facebook’s Internet.org intends to provide universal connectivity using drones, low-Earth orbit and geosynchronous satellites. It is easy to predict that in 2030 everyone will have connectivity. While we are discussing the full impact of 5G, China’s Ministry of Science and Technology has already announced that they are embarking on R&D on 6G – more suited to a world where IoT becomes mainstream.

Li-Fi Technology

Li-Fi technology (Light Fidelity) works on visible light communication, which can send data at much higher speeds than Wi-Fi. As we look for lower latency and higher speed, Li-Fi may very well be the technology that becomes mainstream in 2030. It is a wireless optical networking technology that uses LEDs for data transmission. In the lab, Li-Fi applications have reached transfer speeds of 10 Gbps, faster than a Wi-Fi 6 router. Li-Fi is being pegged as the network technology that will finally enable the true Edge. The recent Aircraft Interiors Expo (AIX) showcased a Li-Fi application that supported 40 Mbps transmissions and streamed HD content from a visible passenger service unit (PSU).

Ecosystm will continue to scan the horizon for newer technologies that can potentially disrupt the already disruptive tech world.

What do you think are the technologies of the future that will shape our lives in this decade? Tell us in your comments.