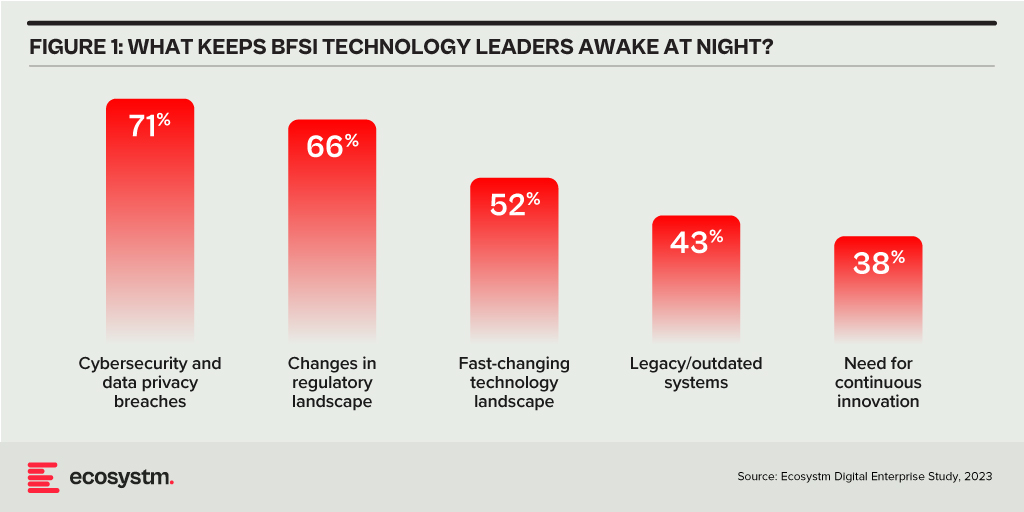

Ecosystm research reveals a stark reality: 75% of technology leaders in Financial Services anticipate data breaches.

Given the sector’s regulatory environment, data breaches carry substantial financial implications, which makes prioritising cybersecurity critical. This is driving a fresh cyber strategy focused on early threat detection and reduction of attack impact.

Read on to find out how tech leaders are building a culture of cyber-resilience, re-evaluating their cyber policies, and adopting technologies that keep them one step ahead of their adversaries.


Trust in the Banking, Financial Services, and Insurance (BFSI) industry is critical – and this amplifies the value of stolen data and fuels the motivation of malicious actors. Ransomware attacks continue to escalate, underscoring the need for fortified backup, encryption, and intrusion prevention systems. Similarly, phishing schemes have become increasingly sophisticated, placing a burden on BFSI cyber teams to educate employees, inform customers, deploy multifactor authentication, and implement fraud detection systems. While BFSI organisations work to fortify their defences, intruders continually find new avenues for profit – cyber protection is a high-stakes game of technological cat and mouse!

Challenges particular to the industry include the rise of cryptojacking – the unauthorised use of a BFSI company’s extensive computational resources for cryptocurrency mining.

Building Trust Amidst Expanding Threat Landscape

BFSI organisations face increasing complexity in their IT landscapes. Amidst initiatives like robo-advisory, point-of-sale lending, and personalised engagements – often facilitated by cloud-based fintech providers – they encounter new intricacies. As guest access extends to bank branches and IoT devices proliferate in public settings, vulnerabilities can emerge unexpectedly. Threats may arise from diverse origins, including misconfigured ATMs, unattended security cameras, or even asset trackers. Ensuring security and maintaining customer trust requires BFSI organisations to deploy automated and intelligent security systems that can respond to emerging threats.

Ecosystm research finds that nearly 70% of BFSI organisations intend to adopt AI and automation for security operations over the next two years. In reality, adoption is still fairly nascent. Their top cyber focus areas remain data security, risk and compliance management, and application security.

Addressing Alert Fatigue and Control Challenges

According to Ecosystm research, 50% of BFSI organisations use more than 50 security tools to secure their infrastructure – and these are only the known tools. Cyber leaders are not only challenged with finding, assessing, and deploying the right tools, they are also challenged with managing them. Management challenges include a lack of centralised control across assets and applications and handling a high volume of security events and false positives.

Software updates and patches within the IT environment are crucial for security operations to identify and address potential vulnerabilities. Management of the IT environment should be paired with greater automation – event correlation, patching, and access management can all be improved through reduced manual processes.

Security operations teams must contend with the thousands of alerts that they receive each day. As a result, security analysts suffer from alert fatigue and struggle to recognise critical issues and novel threats. There is an urgency to deploy solutions that can help to reduce noise. For many organisations, an AI-augmented security team could de-prioritise 90% of alerts and focus on genuine risks.
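As a minimal sketch of how such noise reduction might work, the example below scores each alert by severity and asset value, then discounts it by how often the triggering rule has produced false positives. The `Alert` fields, the scoring formula, and the threshold are all illustrative assumptions, not a description of any particular vendor's product.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    rule: str
    severity: int               # 1 (low) to 5 (critical), hypothetical scale
    asset_criticality: int      # 1 (test machine) to 5 (core banking system)
    false_positive_rate: float  # share of past alerts from this rule that were benign


def risk_score(alert: Alert) -> float:
    """Severity times asset value, discounted by how noisy the rule has been."""
    return alert.severity * alert.asset_criticality * (1.0 - alert.false_positive_rate)


def triage(alerts, threshold=8.0):
    """Return (investigate, deprioritised), each ordered from highest to lowest risk."""
    ranked = sorted(alerts, key=risk_score, reverse=True)
    investigate = [a for a in ranked if risk_score(a) >= threshold]
    deprioritised = [a for a in ranked if risk_score(a) < threshold]
    return investigate, deprioritised


# Illustrative data only
alerts = [
    Alert("failed-login-burst", 2, 2, 0.95),
    Alert("ransomware-signature", 5, 5, 0.10),
    Alert("port-scan", 2, 3, 0.80),
    Alert("privilege-escalation", 4, 4, 0.20),
]
investigate, deprioritised = triage(alerts)
print([a.rule for a in investigate])
```

In practice the false-positive rate would be learned from analyst feedback rather than hard-coded, which is where an AI-augmented approach would add value over a static formula like this one.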

Taken a step further, tools like AIOps can not only prioritise alerts but also respond to them. Directing issues to the appropriate people, recommending actions that can be taken by operators directly in a collaboration tool, and rules-based workflows performed automatically are already possible. Additionally, by evaluating past failures and successes, AIOps can learn over time which events are likely to become critical and how to respond to them. This brings us closer to the dream of NoOps, where security operations are completely automated.
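The rules-based routing described above can be sketched in a few lines. This is a hypothetical illustration: the team names, event fields, and action names are invented for the example, and a real AIOps platform would learn or refine such rules over time rather than rely on a fixed list.

```python
# Each rule is (predicate, team to notify, automated action or None).
# Rules are evaluated in order and the first match wins.
ROUTING_RULES = [
    (lambda e: e["category"] == "phishing", "email-security", "quarantine_message"),
    (lambda e: e["category"] == "malware" and e["severity"] >= 4,
     "incident-response", "isolate_host"),
    (lambda e: e["category"] == "malware", "endpoint-team", None),
]
DEFAULT_ROUTE = ("soc-tier1", None)  # fall back to a human analyst queue


def route(event):
    """Return (team, automated_action) for a security event."""
    for predicate, team, action in ROUTING_RULES:
        if predicate(event):
            return team, action
    return DEFAULT_ROUTE


print(route({"category": "malware", "severity": 5}))
print(route({"category": "policy-violation", "severity": 1}))
```

Keeping a default route to human analysts means events that no rule anticipates are still reviewed rather than silently dropped.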

Threat Intelligence and Visibility for a Proactive Cyber Approach

New forms of ransomware, phishing schemes, and unidentified vulnerabilities in the cloud are emerging to exploit the growing attack surface of financial services organisations. Security operations teams in the BFSI sector spend most of their resources dealing with incoming alerts, leaving them with little time to proactively investigate new threats. Organisations therefore need a partner with the scale to maintain a data lake of threats identified across a broad range of customers, including others in the same industry. For greater predictive capabilities, threat intelligence should also draw on research carried out on the dark web to improve situational awareness. These insights can help security operations teams to prepare for future attacks. Regular reporting that keeps CIOs and CISOs informed of the changing threat landscape can also put executives' minds at ease.

To ensure services can be delivered securely, BFSI organisations require additional visibility of traffic on their networks. The ability to not only inspect traffic as it passes through the firewall but to see activity within the network is critical in these increasingly complex environments. Network traffic anomaly detection uses machine learning to recognise typical traffic patterns and generates alerts for abnormal activity, such as privilege escalation or container escape. The growing acceptance of BYOD has also made device visibility more complex. By employing AI and adopting a zero-trust approach, devices can be profiled and granted appropriate access automatically. Network operators gain visibility of unknown devices and can easily enforce policies on a segmented network.
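To make the idea of traffic anomaly detection concrete, the sketch below learns a baseline from historical traffic volumes and flags observations that deviate sharply from it. Note that it uses a simple z-score test as a stand-in for the machine learning models the text refers to. The function name, the sample volumes, and the threshold of three standard deviations are assumptions made for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, z_threshold=3.0):
    """Flag observed values more than z_threshold standard deviations from the baseline mean.

    Returns a list of (index, value) pairs for the anomalous observations.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    anomalies = []
    for i, value in enumerate(observed):
        z = (value - mu) / sigma if sigma > 0 else 0.0
        if abs(z) > z_threshold:
            anomalies.append((i, value))
    return anomalies


# Illustrative traffic volumes in MB per minute for one network segment
baseline_mb_per_min = [100, 104, 98, 101, 97, 103, 99, 102]
observed_mb_per_min = [101, 96, 180, 103]

print(find_anomalies(baseline_mb_per_min, observed_mb_per_min))
```

A production system would model many features at once (ports, destinations, time of day) and update its baseline continuously, but the principle is the same: learn what is typical, then alert on what is not.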

Intelligent Cyber Strategies

Here is what BFSI CISOs should prioritise to build a cyber resilient organisation.

Automation. The volume of incoming threats has grown beyond the capability of human operators to investigate manually. Increase the level of automation in your SOC to minimise the routine burden on the security operations team and allow them to focus on high-risk threats.

Cyberattack simulation exercises. Many security teams are too busy dealing with day-to-day operations to perform simulation exercises. However, they are a vital component of response planning. Organisation-wide exercises – that include security, IT operations, and communications teams – should be conducted regularly.

An AIOps topology map. Identify where you have reliable data sources that could be analysed by AIOps. Then select a domain by assessing the present level of observability and automation, IT skills gap, frequency of threats, and business criticality. As you add additional domains and the system learns, the value you realise from AIOps will grow.

A trusted intelligence partner. Extend your security operations team by working with a partner that can provide threat intelligence unattainable to most individual organisations. Threat intelligence providers can pool insights gathered from a diversity of client engagements and dedicated researchers. By leveraging the experience of a partner, BFSI organisations can better plan for how they will respond to inevitable breaches.
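For the AIOps topology map, choosing the first domain can be framed as a weighted scoring exercise over the criteria listed above. The sketch below is one hypothetical way to do this: the weights, the 1 to 5 rating scale, and the domain names are invented for illustration and would need to reflect each organisation's own priorities.

```python
# Hypothetical weights for the selection criteria; they sum to 1.0.
WEIGHTS = {
    "observability": 0.30,         # how much reliable data the domain already produces
    "skills_gap": 0.20,            # how stretched the team is in this domain
    "threat_frequency": 0.25,      # how often the domain is targeted
    "business_criticality": 0.25,  # impact on the business if the domain fails
}


def domain_score(ratings):
    """Weighted sum of 1-5 ratings for a candidate AIOps domain."""
    return sum(WEIGHTS[criterion] * ratings[criterion] for criterion in WEIGHTS)


# Illustrative ratings only
domains = {
    "payments": {"observability": 4, "skills_gap": 3,
                 "threat_frequency": 5, "business_criticality": 5},
    "branch-network": {"observability": 2, "skills_gap": 4,
                       "threat_frequency": 3, "business_criticality": 3},
    "hr-systems": {"observability": 3, "skills_gap": 2,
                   "threat_frequency": 2, "business_criticality": 2},
}

best = max(domains, key=lambda name: domain_score(domains[name]))
print(best, round(domain_score(domains[best]), 2))
```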

Conclusion

An effective cybersecurity strategy demands a comprehensive approach that incorporates technology, education, and policies while nurturing a culture of security awareness throughout the organisation. CISOs face the daunting task of safeguarding their organisations against relentless cyber intrusion attempts by cybercriminals, who often leverage cutting-edge automated intrusion technologies.

To maintain an advantage over these threats, cybersecurity teams must have access to continuous threat intelligence; automation will be essential in addressing the shortage of security expertise and managing the overwhelming volume and frequency of security events. Collaborating with a specialised partner possessing both scale and experience is often the answer for organisations that want to augment their cybersecurity teams with intelligent, automated agents capable of swiftly

Google recently extended its Generative AI, Bard, to include coding in more than 20 programming languages, including C++, Go, Java, JavaScript, and Python. The search giant has been eager to respond to last year’s launch of ChatGPT but, as the trusted incumbent, it has naturally been hesitant to move too quickly. The tendency for large language models (LLMs) to produce controversial and erroneous outputs has the potential to tarnish established brands. Google Bard was released in March in the US and the UK as an LLM but lacked the coding ability of OpenAI’s ChatGPT and Microsoft’s Bing Chat.

Bard’s new features include code generation, optimisation, debugging, and explanation. Using natural language processing (NLP), users can explain their requirements to the AI and ask it to generate code that can then be exported to an integrated development environment (IDE) or executed directly in the browser with Google Colab. Similarly, users can request Bard to debug already existing code, explain code snippets, or optimise code to improve performance.

Google continues to refer to Bard as an experiment and highlights that, as is the case with generated text, code produced by the AI may not function as expected. Regardless, the new functionality will be useful for both beginner and experienced developers. Those learning to code can use Generative AI to debug and explain their mistakes or write simple programs. More experienced developers can use the tool to perform lower-value work, such as commenting on code, or scaffolding to identify potential problems.

GitHub Copilot X to Face Competition

While the ability for Bard, Bing, and ChatGPT to generate code is one of their most important use cases, developers are now demanding AI directly in their IDEs.

In March, Microsoft made one of its most significant announcements of the year when it demonstrated GitHub Copilot X, which embeds GPT-4 in the development environment. Earlier this year, Microsoft invested $10 billion into OpenAI to add to the $1 billion from 2019, cementing the partnership between the two AI heavyweights. Among other benefits, this agreement makes Azure the exclusive cloud provider to OpenAI and provides Microsoft with the opportunity to enhance its software with AI co-pilots.

Currently in technical preview, Copilot X will integrate into Visual Studio, Microsoft’s IDE, when it eventually launches. Presented as a sidebar or chat directly in the IDE, Copilot X will be able to generate, explain, and comment on code, debug, write unit tests, and identify vulnerabilities. The “Hey, GitHub” functionality will allow users to chat using voice, suitable for mobile users or more natural interaction on a desktop.

Not to be outdone by its cloud rivals, in April, AWS announced the general availability of what it describes as a real-time AI coding companion. Amazon CodeWhisperer integrates with a range of IDEs, namely Visual Studio Code, IntelliJ IDEA, CLion, GoLand, WebStorm, Rider, PhpStorm, PyCharm, RubyMine, and DataGrip, or natively in AWS Cloud9 and the AWS Lambda console. While the preview worked for Python, Java, JavaScript, TypeScript, and C#, the general release extends support for most languages. Amazon’s key differentiation is that it is available for free to individual users, while GitHub Copilot is currently subscription-based with exceptions only for teachers, students, and maintainers of open-source projects.

The Next Step: Generative AI in Security

The next battleground for Generative AI will be assisting overworked security analysts. Currently, some of the greatest challenges that Security Operations Centres (SOCs) face are being understaffed and overwhelmed with the number of alerts. Security vendors, such as IBM and Securonix, have already deployed automation to reduce alert noise and help analysts prioritise tasks to avoid responding to false threats.

Google recently introduced Sec-PaLM and Microsoft announced Security Copilot, bringing the power of Generative AI to the SOC. These tools will help analysts interact conversationally with their threat management systems and will explain alerts in natural language. How effective these tools will be is yet to be seen, considering that hallucinations in security are far riskier than in an essay written with ChatGPT.

The Future of AI Code Generators

Although GitHub Copilot and Amazon CodeWhisperer had already launched with limited feature sets, it was the release of ChatGPT last year that ushered in a new era in AI code generation. There is now a race between the cloud hyperscalers to win over developers and to provide AI that supports other functions, such as security.

Despite fears that AI will replace humans, in its current state AI is more likely to be used as a tool to augment developers. Although AI and automated testing reduce the burden on the already stretched workforce, humans will continue to be in demand to ensure code is secure and satisfies requirements. A likely scenario is that with coding becoming simpler, rather than the number of developers shrinking, the volume and quality of code written will increase. AI will generate a new wave of citizen developers able to work on projects that would previously have been impossible to start. This may, in turn, increase demand for developers to build on these proofs-of-concept.

How the Generative AI landscape evolves over the next year will be interesting. In a recent interview, OpenAI’s co-founder, Sam Altman, explained that the non-profit model it initially pursued is not feasible, necessitating the launch of a capped-profit subsidiary. The company retains its values, however, focusing on advancing AI responsibly and transparently with public consultation. The entry of Microsoft, Google, and AWS will undoubtedly change the market dynamics and may force OpenAI to at least reconsider its approach once again.